GPT-Powered Voice Assistant Persona

Year

2023

Company

Native Voice AI

OVERVIEW

Role: Sole UX Researcher

Time Frame: 2 Months

Methods: Focus Groups, Workshopping, Survey, Interviews, Concept Testing, Dialogue Scripts, Day-in-the-Life Scenarios

Stakeholder Management & Cross-Functional Collaboration

How I Worked Cross-Functionally:

Executive Alignment:

Conducted stakeholder interviews with the CEO, CTO, and Head of Product to align research priorities.

Delivered a research brief mapping insights to strategic business goals.

Ran executive check-ins to ensure alignment throughout the process.

Engineering & Design Partnership:

Worked with engineers to ensure that recommendations drawn from research insights were technically feasible.

Co-created concept prototypes to align research with design needs.

Facilitated collaborative analysis sessions so the team could observe user feedback directly.

Product Team Integration:

Established a “research steering committee” to connect research insights with roadmap planning.

Created modular research deliverables (executive summary, technical insights, design recommendations).

Ran a collaborative roadmapping workshop to translate insights into development priorities.

Strategic Impact: How Research Drove Product Decisions

1️⃣ Shifted Product Strategy

🔹 Before Research: The team considered a general-purpose AI assistant.

🔹 After Research: The team pivoted to persona-based assistants, prioritizing Rachel for launch.

2️⃣ Validated Brand Partnerships

🔹 Rachel was the best choice for V1 because of Native Voice’s existing iHeartRadio & TuneIn partnerships.

🔹 Alex required more advanced integrations and was planned for Phase 2.

3️⃣ Final Roadmap & Next Steps

Phase 1: Launch Rachel.

Phase 2: Expand to Alex.

Phase 3: Explore Blair & Jay (lower priority).

Conclusion: Research-Driven Product Development

Shifted product strategy from general AI to persona-based assistants.

Ensured user needs directly influenced voice design & functionality.

Created a research-driven roadmap that aligned business goals with user insights.

CONTEXT

Native Voice had previously built brand-specific voice assistants for companies like iHeartRadio and TuneIn, using pre-LLM technologies such as intent-based entity matching (similar to traditional assistants like Siri and Alexa). These assistants handled structured commands but lacked true conversational depth.

With OpenAI’s release of public API access to GPT, the company saw an opportunity to leverage LLMs to create a next-generation, conversational voice assistant.

Research Objectives

I led a foundational research initiative to answer:

How would users engage with a GPT-powered voice assistant in a multimodal experience?

What personas, tone, and use cases would make the assistant most compelling?

Should we develop a general-purpose LLM assistant or persona-based assistants?

How do we prioritize personas and ensure successful brand partnerships?

This research was conducted before OpenAI launched native voice functionality for GPT, making it a first-mover exploration into user expectations for LLM-driven voice interactions. Given the novelty of GPT-powered voice assistants, existing UX heuristics and usability frameworks didn't fully apply, requiring a tailored research approach that would later contribute to establishing best practices for human-AI voice interaction.

RESEARCH APPROACH

To uncover actionable insights, I conducted mixed-methods research, combining qualitative and quantitative techniques to ensure a holistic understanding of user preferences.

Participant Recruitment & Sampling Strategy

To ensure diverse representation, I recruited participants across:

Demographics: Age (18-65), gender (48% female, 44% male, 8% non-binary), income levels, and geographic distribution (urban/suburban/rural).

Technology Adoption: Balanced mix of early adopters, mainstream users, and laggards.

Voice Assistant Experience: Both power users and non-users to capture fresh perspectives.

Use Case Diversity: Participants with interests in lifestyle, research, fitness, and productivity to test potential assistant personas.

1. Focus Groups & Brainstorming Workshops

Two one-hour sessions with four participants per group.

Used Miro for digital whiteboarding to map frustrations with current assistants and identify ideal features & personas.

Explored mental models around AI assistants—how users expect them to behave, engage, and provide value (tone, personality, and functionality).

📌 Key Insight: Users were dissatisfied with existing assistants (e.g., Alexa, Siri) and wanted more proactive, specialized support rather than a generic AI that “tries to do everything.”

2. Survey

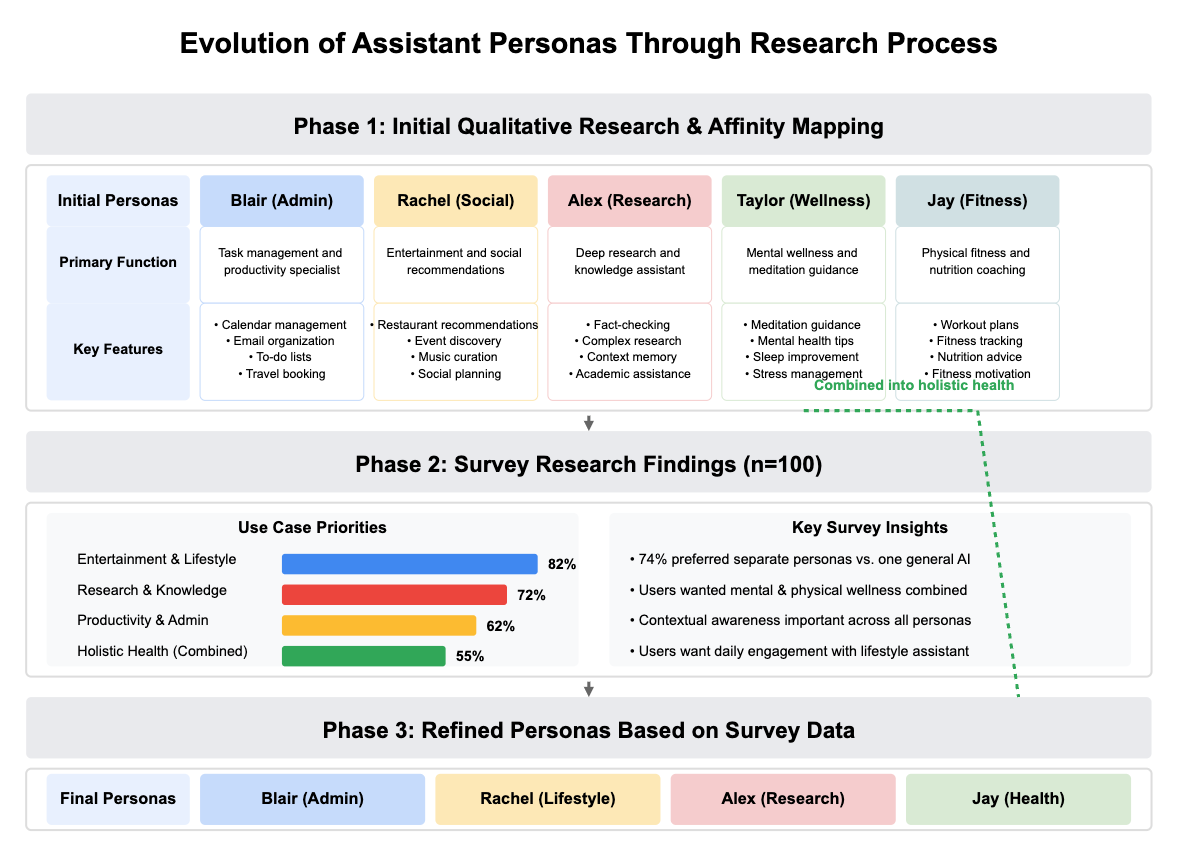

After synthesizing findings from the focus groups, I conducted a survey (n=100) to validate key insights and refine the assistant personas.

Survey Design:

Explored multi-turn use cases including entertainment, mental health, fitness, and news.

Asked users to rank the most valuable assistant features.

Captured user preferences for persona-based vs. general AI assistants.

Key Findings:

Users overwhelmingly preferred persona-based assistants over a general AI.

A holistic health approach was more desirable: participants wanted a single assistant for both mental wellness & fitness.

➡️ This led to merging the initial Taylor (Wellness) and Jay (Fitness) personas into one: Jay (Health).

Deliverable:

A one-page report summarizing survey insights, including key charts and persona refinements.

Impact:

Presented survey findings at the start of an internal workshop with the Product & Design team.

Survey insights directly influenced the persona evolution, leading to the decision to merge wellness & fitness into a single assistant.

3. 1:1 Interviews & Concept Testing

After defining the personas, I conducted concept testing (n=7 participants) to assess:

Overall usefulness, engagement, and excitement for each assistant.

Preferred conversational tone & personality for each persona.

Tradeoffs between excitement and expected frequency of use.

Key Findings:

📌 1. Users Were Dissatisfied with Alexa but Didn't Necessarily Want a General LLM Assistant

Users found Alexa frustrating but also didn’t want a broad AI that “tries to do everything”; specialized personas were more appealing.

📌 2. Users Preferred a “Team” of Assistants, but Not Too Many

Users liked the idea of multiple assistants, but felt that too many personas could be overwhelming.

Rachel and Alex emerged as the most compelling assistants.

📌 3. Rachel Was the Best Choice for V1

Given Native Voice’s existing partnerships with iHeartRadio & TuneIn, Rachel was the best fit for launch because:

- Leverages existing brand integrations (music, events, entertainment).

- Simpler AI scope: doesn’t require the complex multi-turn conversations that Alex does.

- Strong engagement potential: used multiple times per day.

Alex should be built next, but it requires:

- More challenging brand partnerships (Britannica, Google Search).

- More advanced AI capabilities (deep contextual conversations).