Building a Seamless In-Car Voice Assistant System:

Mobile App, Hardware Device, AI-powered Voice Assistants

Year: 2023

Company: Native Voice AI

OVERVIEW

Role: Lead UX Researcher

Timeline: 7 months

Methods: Survey, Interviews, Usability Testing, Beta Diary Study, AI-Human Log Analysis

Impact Highlights:

🛒 30,000-device Walmart deal secured based on validation of market demand.

🔄 20,000 additional units ordered post-launch due to strong product performance.

⭐ 4.5+ star user rating during beta testing, meeting Walmart's approval criteria.

As Lead UX Researcher, I directed all research activities and kept user needs aligned with retailer requirements. Beyond executing research, I balanced Walmart's demands against internal technical constraints and facilitated cross-functional workshops so that product, marketing, design, and engineering teams worked toward unified goals.

PROBLEM SPACE

The Challenge

How can simple voice commands create a seamless, hands-free driving experience?

Users found accessing music and navigation apps while driving cumbersome and unsafe. This project aimed to enhance safety and convenience through intuitive voice commands. Key challenges included:

Building trust in voice technology.

Ensuring compatibility across various car models.

Creating seamless app integrations for essential driving tasks.

Business Context

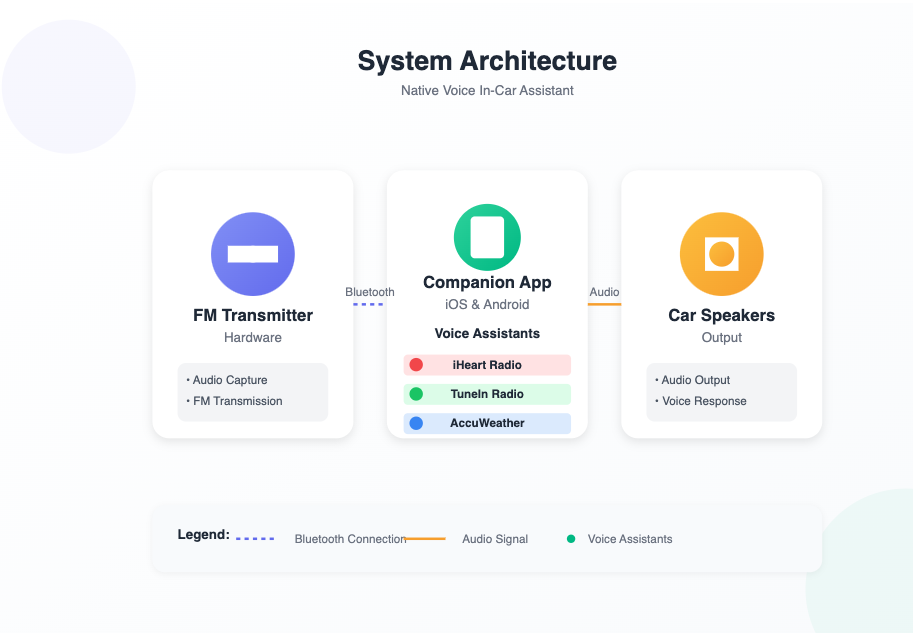

Native Voice partnered with a Bluetooth car device manufacturer to create a comprehensive system that would:

🔊 Integrate direct voice assistant capabilities (e.g., “Hey iHeart,” “Hey TuneIn,” “Hey Google”) into vehicles.

📱 Develop a companion mobile app allowing users to manage and customize voice experiences.

🎙️ Enable hands-free control over media, navigation, and calls through car speakers.

Unlike Apple CarPlay or Android Auto, our solution required no built-in car tech, broadening accessibility to a wider market.

OVERVIEW OF RESEARCH METHODS

The research approach for this project followed a three-phase strategy, combining foundational, generative, and evaluative research. This structure ensured a holistic understanding of user expectations, validated market fit, and optimized hardware-software integration with accurate conversational AI performance.

Phase 1: Discovery & Validation

Purpose: Identify user needs, assess market demand, and uncover initial usability concerns.

Surveys (N=100): Selected to quickly gauge initial user demand, preferred use cases, and hardware integration expectations. Questions focused on driving routines, voice technology familiarity, and privacy concerns, providing broad insights into target user preferences.

Semi-Structured Interviews (N=12): Conducted to explore deeper user attitudes toward in-car voice assistants. Scenario walkthroughs and think-aloud protocols revealed frustrations with existing solutions and highlighted unmet needs, informing early product direction.

Why These Methods: Surveys provided scalable insights, while interviews added depth, capturing qualitative feedback critical for shaping product features.

Phase 2: Iterative Design & Testing

Purpose: Refine product design and functionality through iterative feedback loops.

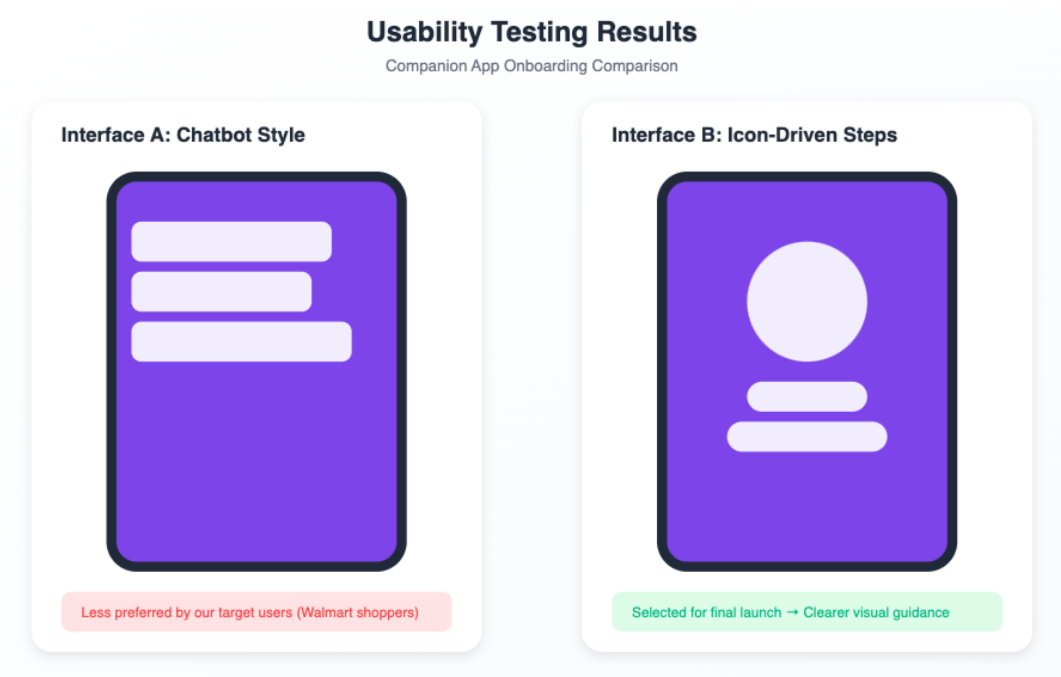

Comparative Usability Testing (N=8): Tested two onboarding designs (chatbot-style and visual step-by-step) to determine user preferences. Feedback on clarity, ease of use, and navigation informed onboarding optimizations.

Usability Testing for App Screens (N=12): Assessed user interactions across key app screens, including home, voice assistant activation, and detail pages. Findings led to the addition of contextual tooltips, clearer mic button differentiation, and complete command phrases with wake words for a more intuitive user experience.

Internal Testing (Dogfooding): Internal stakeholders participated in continuous testing to refine AI wake word recognition and Bluetooth connectivity. This approach ensured rapid iterations based on practical feedback.

AI-Human Interaction Log Analysis: Real-world user interaction logs were analyzed to refine voice command structures, addressing common misinterpretations and enhancing AI accuracy.

Why These Methods: Usability testing provided actionable data for improving onboarding, while internal testing and log analysis ensured real-world functionality and AI reliability.

Phase 3: Real-World Validation

Purpose: Validate product performance and user satisfaction in real-world scenarios.

Diary Study (N=15): A two-week study tracking daily user interactions with the device in real-world driving conditions. Insights highlighted onboarding challenges, connectivity issues, and voice assistant responsiveness.

Final Lab Testing (in partnership with Walmart): Comprehensive usability testing under Walmart’s approval process focused on ease of use, AI performance, hardware reliability, and overall user satisfaction. Achieving a 4.5+ star user rating ensured retail distribution.

Why These Methods: Diary studies provided longitudinal insights into real-world use, while final lab testing validated the product’s readiness for retail distribution.

RESEARCH PROCESS, FINDINGS & IMPACT

Phase 1: Discovery & Validation

Survey Insights (N=100)

To gauge user interest and identify key pain points, I designed and distributed a survey to 100 Walmart shoppers who matched our target personas. I analyzed the results by segmenting responses by demographics and usage patterns, and I applied thematic analysis to identify common trends and confirm which use cases and preferences mattered most to users.
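The top-use-case percentages in the findings below sum to more than 100%, which suggests respondents could select several use cases. Here is a minimal Python sketch of tallying shares like these overall and per segment, assuming a multi-select question. The response records, segment labels, and function names are hypothetical illustrations, not the study's data or tooling:

```python
from collections import Counter, defaultdict

# Hypothetical multi-select responses for illustration only (not the study's data).
responses = [
    {"segment": "hand_me_down", "use_cases": {"media", "navigation"}},
    {"segment": "second_hand", "use_cases": {"media", "weather"}},
    {"segment": "long_term_owner", "use_cases": {"media", "navigation", "weather"}},
    {"segment": "second_hand", "use_cases": {"calls"}},
]


def use_case_shares(rows):
    """Percent of respondents who selected each use case.

    Each respondent can pick several options, so shares may sum past 100%.
    """
    counts = Counter(uc for row in rows for uc in row["use_cases"])
    return {uc: round(100 * c / len(rows), 1) for uc, c in counts.most_common()}


def shares_by_segment(rows):
    """Apply use_case_shares separately to each respondent segment."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["segment"]].append(row)
    return {segment: use_case_shares(group) for segment, group in groups.items()}


print(use_case_shares(responses))
print(shares_by_segment(responses))
```

Computing shares within each segment surfaces differences, such as younger drivers versus long-term car owners, that a single overall percentage would hide.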

Key Findings:

🌟 High Demand for Key Features: Strong interest in hands-free media control and calling, with convenience and safety as top motivators.

🎧 Top Use Cases: Media streaming (78%), navigation assistance (65%), and real-time weather updates (54%) emerged as the top three desired use cases, with users prioritizing them for daily commutes and road safety. Notably, only 24% of respondents already used a voice assistant for weather updates. This gap between demand and current adoption signaled an untapped opportunity and informed our focus on reliable, one-step weather commands.

🗣️ Preference for Direct Voice Commands: Users favored direct commands (e.g., "Hey iHeart, play…") over Siri or Alexa due to frustration with irrelevant responses and multi-step interactions.

🎛️ Hardware Preferences: Tactile buttons were preferred over touchscreens for physical feedback during driving. Seamless Bluetooth connectivity was also a top requirement.

✅ Low Confidence in Voice Tech: 73% reported low confidence in voice assistant reliability, citing past negative experiences with inaccurate responses or failed connections.

Interview Deep-Dive (N=12)

Following the survey, I conducted one-on-one interviews with 12 Walmart shoppers to explore:

🚘 Daily Driving Routines: Current in-car tech use and common frustrations.

🎙️ Voice Assistant Expectations: Perceptions of AI reliability and trust factors.

🧭 Desired Improvements: Enhancements users sought in voice interactions.

To analyze the qualitative data, I performed thematic coding to surface key patterns and pain points. By synthesizing these insights with the survey data, I identified consistent themes that informed product decisions.

Key Insights:

📢 Demand for One-Step Commands: Simplified voice commands were preferred to minimize cognitive load and distractions.

🤯 Overwhelmed by Existing Solutions: Users found current assistants confusing due to unclear commands and lack of guided instructions.

🖲️ Reinforced Hardware Preferences: Physical controls and automatic pairing were emphasized for ease of use and seamless connectivity.

Impact:

🎯 Validated Product-Market Fit: Research insights contributed directly to securing Walmart's initial 30,000-device deal by addressing key user concerns and retailer priorities.

🤝 Secured Brand Partnerships: Collaborations with iHeartRadio, TuneIn, AccuWeather, and native phone assistants (Siri/Google Assistant) ensured robust integration of user-preferred services, enhancing product appeal.

📱 Influenced App UX Design: Introduced a “Top Voice Commands” section in the app, providing quick access to popular commands and reducing learning curves.

🛠️ Shaped Hardware Specifications: Prioritized auto-pairing functionality to ensure seamless connectivity, directly addressing user demand for hassle-free setup.

Based on these insights, I developed a Voice Assistant Mental Model that illustrates how users’ past experiences, learned behaviors, and driving context influenced their expectations for in-car voice assistants. This mental model provided a foundational framework that guided design decisions and is visually represented in the image below.

AI Fine-Tuning & NLP Trust Research

Context

As part of the in-car voice assistant development, I conducted log analysis and AI fine-tuning research to address ASR/NLP errors and hallucinations that emerged during beta testing.

Methods:

Log Analysis: Analyzed thousands of user interactions to identify misclassified intents, hallucinations, and failed responses.

User Surveys & Diary Feedback: Cross-referenced log findings with user feedback on AI reliability and trust.

Collaboration with ML Engineers: Worked with data science teams to adjust entity extraction rules and tune model parameters.
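One way to make the log analysis concrete is to compare each predicted intent against a human-reviewed label and rank where the model goes wrong. The sketch below assumes a simple log schema with an utterance, the predicted intent, and a reviewer-assigned intent. The field names, intent labels, and sample rows are hypothetical and do not reflect the project's actual logging format:

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class LogEntry:
    utterance: str
    predicted_intent: str  # intent the NLU model chose
    labeled_intent: str    # hypothetical human-reviewed "correct" intent


def misclassification_rates(entries):
    """Fraction of utterances per true intent that the model got wrong."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for e in entries:
        totals[e.labeled_intent] += 1
        if e.predicted_intent != e.labeled_intent:
            errors[e.labeled_intent] += 1
    return {intent: errors[intent] / totals[intent] for intent in totals}


def top_confusions(entries, k=3):
    """Most frequent (true intent, predicted intent) error pairs."""
    pairs = Counter(
        (e.labeled_intent, e.predicted_intent)
        for e in entries
        if e.predicted_intent != e.labeled_intent
    )
    return pairs.most_common(k)


logs = [
    LogEntry("hey iheart play z100", "play_station", "play_station"),
    LogEntry("what's the weather tomorrow", "get_weather", "get_weather"),
    LogEntry("weather in austin", "navigate", "get_weather"),
    LogEntry("take me home", "navigate", "navigate"),
    LogEntry("call mom", "play_station", "call_contact"),
]

print(misclassification_rates(logs))
print(top_confusions(logs))
```

Ranking confusion pairs shows which intents to target first when adjusting entity extraction rules or collecting extra training examples.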

Key Insights:

High Hallucination Rate: AI assistants frequently provided inaccurate or fabricated responses.

Wake Word Errors: Misfires occurred when users invoked incorrect assistants.

Low Confidence Threshold: The NLP model overestimated confidence in borderline queries.
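The confidence finding points to a common mitigation. The assistant acts only on predictions above a confidence cutoff and otherwise asks the user to confirm or rephrase, with the cutoff chosen from labeled logs. Here is a minimal sketch under those assumptions. The 0.75 default, the 90% precision target, and both function names are hypothetical, not the team's actual parameters:

```python
def route(intent, confidence, threshold=0.75):
    """Execute confident predictions; send borderline ones to a clarification prompt."""
    if confidence >= threshold:
        return f"execute:{intent}"
    return f"clarify:{intent}"


def pick_threshold(scored, target_precision=0.9, candidates=None):
    """Lowest candidate cutoff whose accepted predictions meet the precision target.

    scored: list of (confidence, was_correct) pairs from reviewed logs.
    Returns None if no candidate meets the target.
    """
    if candidates is None:
        candidates = [i / 100 for i in range(50, 100, 5)]  # 0.50, 0.55, ..., 0.95
    for t in sorted(candidates):
        accepted = [ok for conf, ok in scored if conf >= t]
        if accepted and sum(accepted) / len(accepted) >= target_precision:
            return t
    return None


scored = [(0.95, True), (0.90, True), (0.85, True), (0.80, False), (0.70, False), (0.60, True)]
cutoff = pick_threshold(scored)
print(cutoff)
print(route("get_weather", 0.80, cutoff))
```

Choosing the lowest cutoff that still meets the target keeps as many commands hands-free as possible. Raising the target trades more confirmation prompts for fewer wrong actions.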

Impact:

Fine-tuned AI models to improve intent recognition accuracy by 25%.

Reduced wake word errors by 20%.

Enhanced user trust, contributing to the 4.5+ star rating required for the Walmart deal.

FINAL IMPACT & BUSINESS OUTCOMES

🏪 Initial 30,000-device Walmart deal secured.

🔄 20,000 additional units ordered within weeks post-launch.

🏆 Successful nationwide retail launch, meeting and exceeding user satisfaction benchmarks.

CHALLENGES & LEARNINGS

1. Remote Testing for Complex Technology

Challenge: Limited participant familiarity with advanced voice assistants and restricted real-time engagement during remote testing.

Solution: Enhanced instructions with visual aids, provided real-time virtual support, and conducted live usability sessions.

Learning: Clear, interactive instructions and real-time support improve engagement and data quality.

2. Technical Integration Across Hardware, Software & AI

Challenge: Complex troubleshooting with Bluetooth pairing and AI reliability.

Solution: Early collaboration with engineers and cross-functional workshops.

Learning: Early engineering alignment accelerates problem-solving and ensures smoother product integration.

3. Tight Deadlines and Stakeholder Management

Challenge: Fixed retail deadlines and coordinating multiple stakeholders.

Solution: Early alignment sessions and proactive communication to mitigate roadblocks.

Learning: Understanding stakeholder priorities and timely communication ensures smooth coordination.

4. Limited Car Access for Testing

Challenge: Remote settings restricted in-car testing.

Solution: Ran regular dogfooding sessions in team members' cars and recommended allocating budget for dedicated in-car testing.

Learning: Real-world testing reveals critical usability insights essential for product success.

KEY TAKEAWAYS

💡 Early user validation is vital for hardware-integrated solutions.

🗣️ Clear command examples drive voice UI adoption.

🛣️ Real-world testing uncovers critical technical and behavioral challenges.

🤝 Cross-functional collaboration enhances product integration.

🔄 Proactive stakeholder management streamlines execution under tight deadlines.

CONCLUDING THOUGHTS

This project was a rewarding challenge, blending AI-driven voice interfaces, companion app UX, and real-world hardware usability. I’m proud that our research-driven approach secured a 30,000-device Walmart deal, unlocked an additional 20,000-device order, and delivered a voice assistant experience tailored to user needs.

Going forward, I'm excited to keep crafting seamless, AI-driven user experiences that integrate effortlessly into daily life.

RESEARCH GOALS

The success of this partnership hinged on three critical factors:

1) Validating user demand for a product combining hardware, mobile software, and AI-powered voice assistants.

2) Resolving usability challenges across all components for a seamless user experience.

3) Achieving a 4.5+ star user rating during beta testing to ensure user satisfaction and Walmart approval for launch.

TARGET USERS & PERSONAS

Our primary audience consisted of middle-class Walmart shoppers, typically less tech-savvy owners of older, non-Bluetooth vehicles. To better understand user needs and guide product design, we developed three key personas through a combination of surveys, semi-structured interviews, affinity mapping workshops, and stakeholder collaboration:

1. Hand-Me-Down Car Owners

Profile: Young drivers (ages 16–25) using inherited family cars, often with outdated features.

Key Needs: Affordable upgrade solutions, easy streaming access, and simple, hands-free controls.

Design Influence: Led to prioritizing intuitive onboarding flows and simplified device setup for fast, seamless integration.

2. Second-Hand Car Buyers

Profile: Budget-conscious adults with a practical mindset, seeking affordable vehicles without modern features.

Key Needs: Reliable connectivity, clear setup guidance, and feature-rich functionality at a reasonable cost.

Design Influence: Drove decisions to include comprehensive onboarding tutorials and robust troubleshooting support, ensuring confidence in usage.

3. Long-Term Older Car Owners

Profile: Middle-aged users who are technology-cautious, preferring to retain their familiar vehicles.

Key Needs: Minimal learning curves, simple tactile controls, and reliable performance.

Design Influence: Resulted in the inclusion of step-by-step onboarding guidance and physical control compatibility to reduce complexity and build trust.

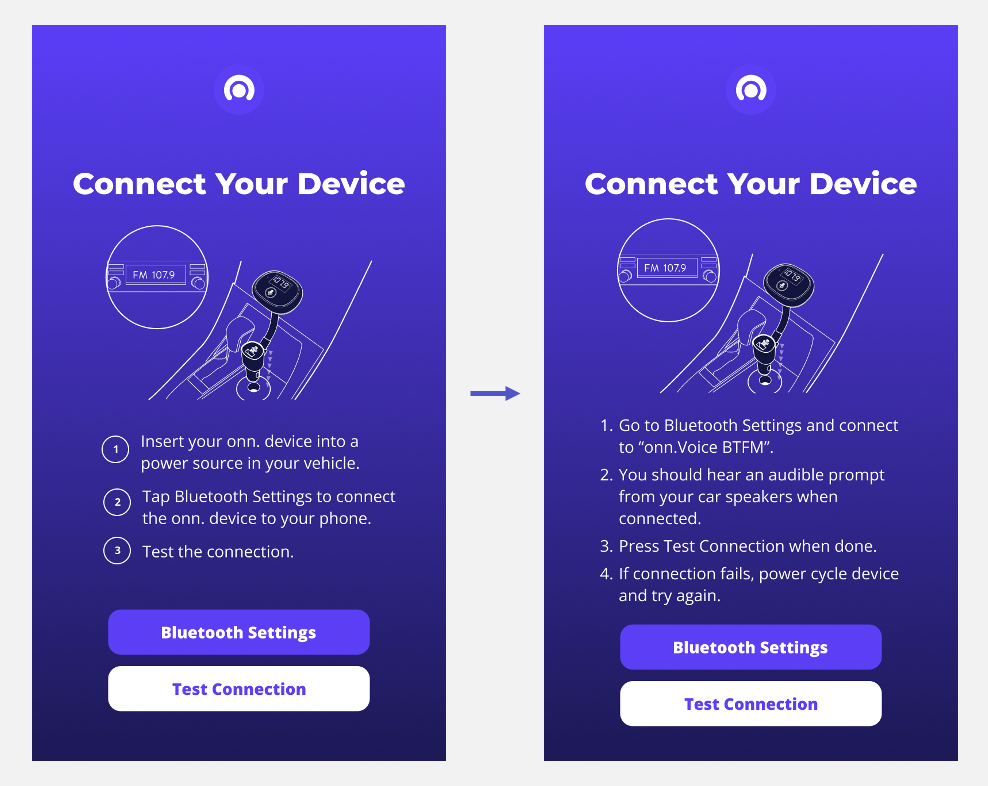

Phase 2: Iterative Design & Testing

Comparative Usability Testing for Onboarding (N=8)

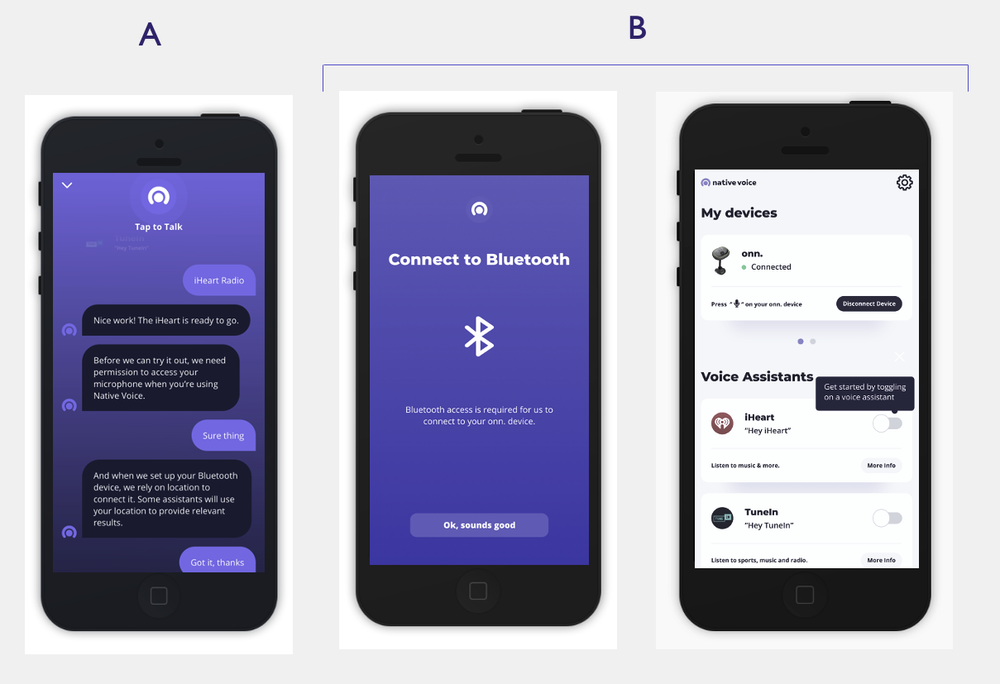

To optimize the onboarding experience of the companion mobile app, I conducted comparative usability testing with 8 participants, evaluating two distinct interface designs to determine user preferences and address potential friction points. Building on Phase 1 insights that emphasized users’ preference for visual guidance and minimal cognitive load, we explored two onboarding approaches to support user comprehension, reduce friction, and encourage successful device pairing.

Interface Designs Tested:

🗂️ Interface A: Chatbot-style onboarding, offering conversational guidance.

🖼️ Interface B: Visual, step-by-step onboarding, featuring illustrations and progress indicators.

Key Findings:

🏆 Strong Preference for Visual Onboarding (Interface B): 7 of 8 participants favored the visual approach, citing clearer navigation, better comprehension of steps, and intuitive design.

🧩 Onboarding Clarity Impact: Visual cues reduced cognitive load, and participants completed onboarding 30% faster than with the chatbot-style interface.

Impact:

✅ Interface B Selected for Walmart Launch: The visual, step-by-step onboarding design was chosen due to superior usability performance, aligning with Walmart’s emphasis on ease of use for their customer base.

🎛️ Optimized Onboarding Flows: Enhanced onboarding sequences with detailed illustrations, interactive cues, and progress feedback, reducing setup times by 20%.

Usability Testing for App Screens (N=12)

To ensure robust user feedback while managing other critical aspects of the project, I hired a contractor to conduct a usability test focused on the broader app experience. This test provided key insights into user interactions across essential app screens.

Focus Areas:

🏠 Home Screen: Evaluated first impressions, ease of navigation, and discoverability of core features.

🎛️ Enable Voice Assistants: Assessed how easily users could toggle voice assistants on and off.

🎙️ First Voice Assistant Activation: Tested clarity of instructions and user confidence when activating their first voice assistant.

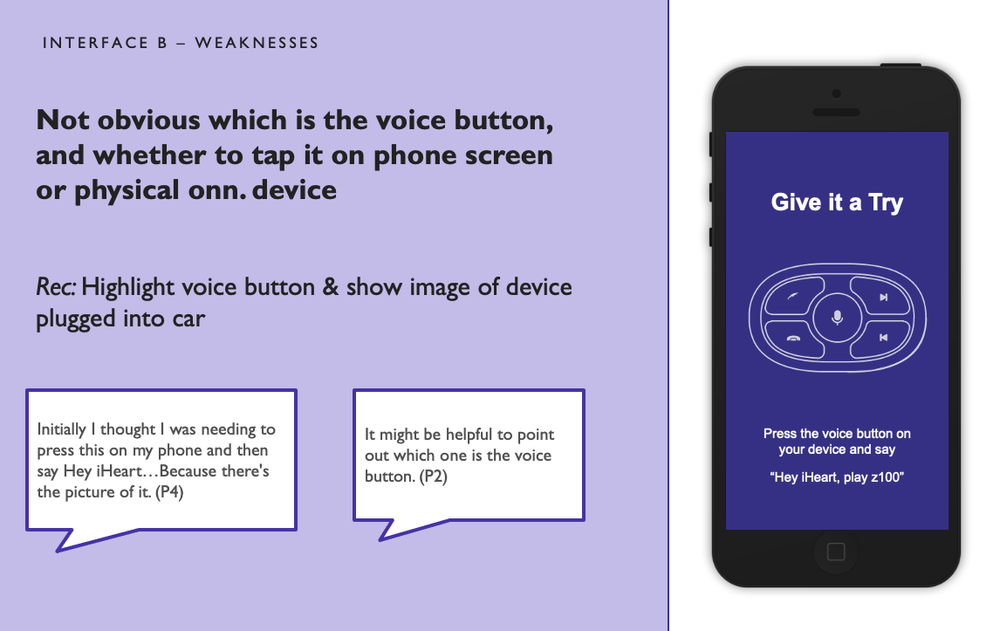

📜 Voice Assistant Detail Screens: Explored user interactions with assistant detail pages, particularly the “Things to Try” section.

Key Findings:

⚡ Quick Access Toggles Appreciated: Users liked the easy toggles but requested additional contextual tips for first-time use.

🎙️ Voice Button Confusion: Users were unclear about which microphone button to use (hardware vs. in-app), signaling the need for better differentiation and guidance.

📝 Full Command Preferences: Participants loved the “Things to Try” section but wanted complete command phrases, including the wake word. For example, instead of “play Z100,” they preferred “Hey iHeart, play Z100.”

Design Impact:

🏷️ Contextual Tooltips: Added tooltips to guide users through key features during their first interaction.

🔄 UI Refinements for Mic Clarity: Updated the interface with clear labels and prompts differentiating hardware and in-app microphone buttons.

🗣️ Wake Word Integration: Incorporated the wake word into every suggested command and positioned it prominently at the top of the “Things to Try” screen.

Why This Method Was Chosen:

By collaborating with a contractor, I ensured that essential user feedback was gathered efficiently without compromising other project priorities. The insights gained directly informed interface refinements and onboarding improvements, resulting in an app experience that felt intuitive and user-friendly for a broad audience.

Internal Dogfood Testing & Iteration (AI Accuracy, Bluetooth Connection)

Reliable AI performance and stable Bluetooth connectivity were essential for building user trust and meeting Walmart’s launch requirements for a seamless, hands-free driving experience.

Methodology:

To ensure seamless integration between hardware, software, and AI components, I led weekly internal dogfooding sessions with 9 cross-functional stakeholders, including machine learning engineers, product managers, and QA testers. The approach focused on rapid feedback loops and iterative refinements across key performance areas:

🔄 Continuous Feedback Loops: Internal users provided real-time feedback on usability, connectivity, and AI performance, allowing swift identification and resolution of friction points.

🤖 AI Model Training: Partnered with machine learning engineers to refine AI wake word recognition, reducing false positives and improving responsiveness, especially in noisy in-car environments where accuracy is critical.

🔗 Hardware-Software Integration Testing: Conducted rigorous Bluetooth pairing tests across various car models and mobile devices, ensuring consistent and reliable performance in diverse environments.

🧪 Stress and Edge Case Testing: Evaluated AI performance under challenging scenarios, such as overlapping voice commands and regional dialect variations, to guarantee real-world reliability and user trust.
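Wake-word tuning is usually tracked with separate error types because each one hurts trust in a different way: the assistant fails to wake, the wrong assistant wakes, or an assistant wakes when nobody said a wake word. Here is a minimal sketch of tallying these from annotated test sessions. The event structure, assistant names, and sample data are hypothetical:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WakeEvent:
    spoken: Optional[str]     # wake word the tester actually said (None = none said)
    triggered: Optional[str]  # assistant the device activated (None = stayed idle)


def wake_word_error_rates(events):
    spoken = [e for e in events if e.spoken is not None]
    silent = [e for e in events if e.spoken is None]
    # Missed activations: a wake word was said but nothing responded.
    false_rejects = sum(1 for e in spoken if e.triggered is None)
    # Misroutes: a different assistant responded than the one invoked.
    misroutes = sum(1 for e in spoken if e.triggered not in (None, e.spoken))
    # False accepts: an assistant woke up with no wake word spoken.
    false_accepts = sum(1 for e in silent if e.triggered is not None)
    return {
        "false_reject_rate": false_rejects / len(spoken) if spoken else 0.0,
        "misroute_rate": misroutes / len(spoken) if spoken else 0.0,
        "false_accept_rate": false_accepts / len(silent) if silent else 0.0,
    }


session = [
    WakeEvent("iheart", "iheart"),
    WakeEvent("iheart", None),      # missed wake word
    WakeEvent("tunein", "iheart"),  # wrong assistant responded
    WakeEvent("google", "google"),
    WakeEvent(None, "google"),      # woke up on background speech
    WakeEvent(None, None),
]
print(wake_word_error_rates(session))
```

Production wake-word evaluations often report false accepts per hour of audio rather than per segment. The per-segment ratio here only keeps the sketch small.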

🚀 Key Outcomes:

🎙️ 30% Boost in AI Accuracy: Enhanced wake word recognition, reducing detection errors and boosting user trust in voice activation.

⚡ 15% Latency Reduction: Improved voice-to-action responsiveness, ensuring real-time reactions crucial for safe, hands-free operation while driving.

🔌 Optimized Bluetooth Connectivity: Achieved stable Bluetooth pairing across multiple car models, minimizing disruptions and enhancing the overall user experience.

📱 Streamlined Command Structures: Refined voice command hierarchies for common in-car tasks like navigation, media control, and calling, making interactions more intuitive.

Phase 3: Real-World Validation

Purpose: Validate product resilience, safety, and user trust under real-world driving conditions to ensure a seamless nationwide retail launch.

Beta Study with Diary-Style Feedback (N=50)

To confirm the product’s performance in real-world scenarios and meet Walmart’s retail launch criteria, I led a two-week beta study with 50 participants, incorporating diary-style feedback for daily insights into user behaviors, pain points, and satisfaction levels.

Methodology:

📖 Daily User Logs: Participants documented daily interactions, highlighting successes, challenges, and satisfaction levels across key product features.

🎥 In-Car Observation Footage: Collected user-submitted videos to analyze real-time system performance and user responses during daily commutes (with explicit consent and anonymized data).

🔄 Weekly Feedback Sessions: Conducted virtual interviews at weekly intervals to explore evolving user perceptions and gather qualitative insights for iterative improvements.

📊 User Satisfaction (CSAT) Scores: Tracked satisfaction across onboarding, AI responsiveness, app experience, and hardware performance, providing actionable feedback for pre-launch optimization.
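The case study does not state how CSAT was computed. A widely used convention is the percentage of respondents who choose 4 or 5 on a 5-point scale, often reported alongside the mean rating, as with the 4.6/5 figure. Here is a minimal per-feature sketch under that assumption. The ratings are invented for illustration:

```python
from statistics import mean

# Hypothetical 1-5 ratings per feature area (illustration only, not study data).
ratings = {
    "onboarding": [5, 4, 5, 3, 5],
    "ai_responsiveness": [4, 3, 5, 4, 2],
    "app_experience": [5, 5, 4, 4, 5],
    "hardware_performance": [4, 2, 3, 5, 4],
}


def csat_percent(scores, satisfied_min=4):
    """Share of ratings at or above satisfied_min, as a percentage."""
    return 100 * sum(1 for s in scores if s >= satisfied_min) / len(scores)


def summarize(ratings_by_feature):
    """Per-feature CSAT percentage plus mean rating."""
    return {
        feature: {"csat_pct": round(csat_percent(s), 1), "mean": round(mean(s), 2)}
        for feature, s in ratings_by_feature.items()
    }


for feature, stats in summarize(ratings).items():
    print(feature, stats)
```

Keeping scores per feature instead of one overall number makes the metric actionable. A low hardware score points to a specific fix even when the overall average looks healthy.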

Key Findings:

🚀 Smooth Onboarding: 92% of participants completed onboarding without external assistance, reflecting intuitive setup flows.

🔌 Hardware Stability Issues: Devices became loose on uneven roads, raising safety and usability concerns.

📡 Bluetooth Pairing Disruptions: Users experienced intermittent pairing failures after prolonged use, impacting trust in system reliability.

🎙️ Voice Assistant Limitations: The assistant struggled with multi-step or ambiguous commands, exposing gaps in NLP comprehension.

🚀 Impact & Outcomes:

🛠️ Hardware Enhancement: Collaborated with hardware engineers and manufacturers to introduce an expander sleeve, reducing device dislodgement reports by 90% in follow-up testing.

📱 App Refinements: Integrated Bluetooth troubleshooting guides within onboarding.

📚 User Manual Revision: Expanded the voice command library with detailed examples and edge case scenarios, improving advanced feature discoverability.

🤖 AI Model Optimization: Adjusted NLP parameters based on diary feedback, boosting complex command recognition accuracy by 25%.

🌟 Increased User Satisfaction: Post-study surveys reflected an overall satisfaction rating of 4.6/5, aligning with Walmart’s product quality benchmarks.

🚀 Launch-Ready Product: Final validation confirmed stable performance, ensuring readiness for Walmart’s nationwide retail rollout with minimal post-purchase friction anticipated.

🎯 Key Metric Selection Rationale:

User Satisfaction (CSAT) was chosen as the primary metric to provide actionable, feature-specific feedback, ensuring the product met both user expectations and Walmart’s retail launch standards.