Building a Seamless In-Car Voice Assistant System:

Mobile App, Hardware Device, AI-powered Voice Assistants

Year:

2023

Company:

Native Voice AI

OVERVIEW

Role: Lead UX Researcher

Timeline: 7 months

Methods: Survey, Interviews, Usability Testing, Beta Diary Study, AI-Human Log Analysis

Impact Highlights:

Secured 30,000-device Walmart deal based on validated user needs

20,000 additional units ordered post-launch due to strong retail performance

Achieved 4.6/5 user satisfaction rating during beta testing, exceeding Walmart's distribution requirements

As the Lead UX Researcher, I directed all research activities for Native Voice's partnership with a Bluetooth device manufacturer, creating an affordable voice assistant solution for older vehicles. I led stakeholder alignment between Walmart’s requirements and technical constraints while facilitating cross-functional collaboration across product, marketing, design, and engineering teams.

A key challenge involved convincing Walmart’s leadership to prioritize AI reliability over additional features. By leveraging early user trust data, I secured executive buy-in, aligning technical efforts with business goals.

PROBLEM SPACE

The Challenge

How can simple voice commands create a seamless, hands-free driving experience?

Users found accessing music and navigation apps while driving cumbersome and unsafe. This project aimed to enhance safety and convenience through intuitive voice commands. Key challenges included:

Building trust in voice technology.

Ensuring compatibility across various car models.

Creating seamless app integrations for essential driving tasks.

Business Context

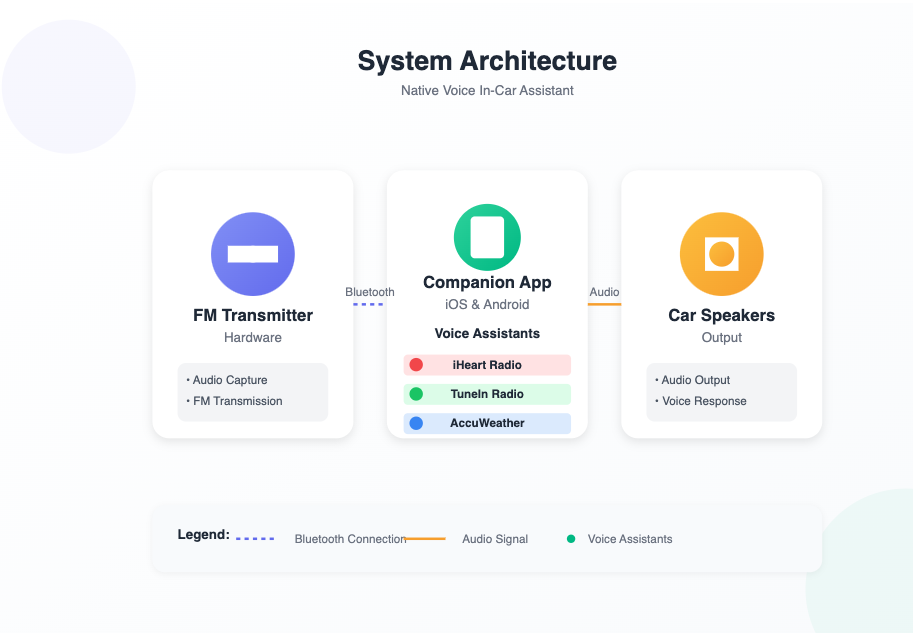

Native Voice partnered with a Bluetooth car device manufacturer to create a comprehensive system that would:

Integrate direct voice assistant capabilities (e.g., “Hey iHeart,” “Hey TuneIn,” “Hey Google”) into vehicles.

Develop a companion mobile app allowing users to manage and customize voice experiences.

Enable hands-free control over media, navigation, and calls through car speakers.

Unlike Apple CarPlay or Android Auto, our solution required no built-in car tech, broadening accessibility to a wider market.

Stakeholder Influence Moment: Convincing Walmart’s leadership to prioritize AI accuracy required presenting compelling user trust data from early surveys. This pivot ensured we invested in the right areas for user adoption and business success.

OVERVIEW OF RESEARCH METHODS

The research approach for this project followed a three-phase strategy, combining foundational, generative, and evaluative research. This structure ensured a holistic understanding of user expectations, validated market fit, and optimized hardware-software integration with accurate conversational AI performance.

Phase 1: Discovery & Validation

Purpose: Identify user needs, assess market demand, and uncover usability concerns to guide product direction and retail strategy.

Survey (N=100):

Revealed strong demand for media streaming (78%), navigation (65%), and weather updates (54%). Current use of weather voice commands was notably low (24%), signaling an opportunity for reliable one-step commands.

Interviews (N=12):

Uncovered preferences for direct voice commands (e.g., “Hey iHeart, play…”), tactile hardware controls, and seamless Bluetooth connectivity. 73% cited low confidence in voice assistants, highlighting the need for consistent performance.

Why These Methods: Surveys provided scalable insights, while interviews added depth, capturing qualitative feedback critical for shaping product features.

Impact:

Secured Walmart’s 30,000-unit order by addressing user concerns around simplicity and reliability.

Secured Brand Partnerships: Collaborations with iHeartRadio, TuneIn, AccuWeather, and native phone assistants (Siri/Google Assistant) ensured robust integration of user-preferred services, enhancing product appeal.

App UX Enhancement: Introduced a “Top Voice Commands” section.

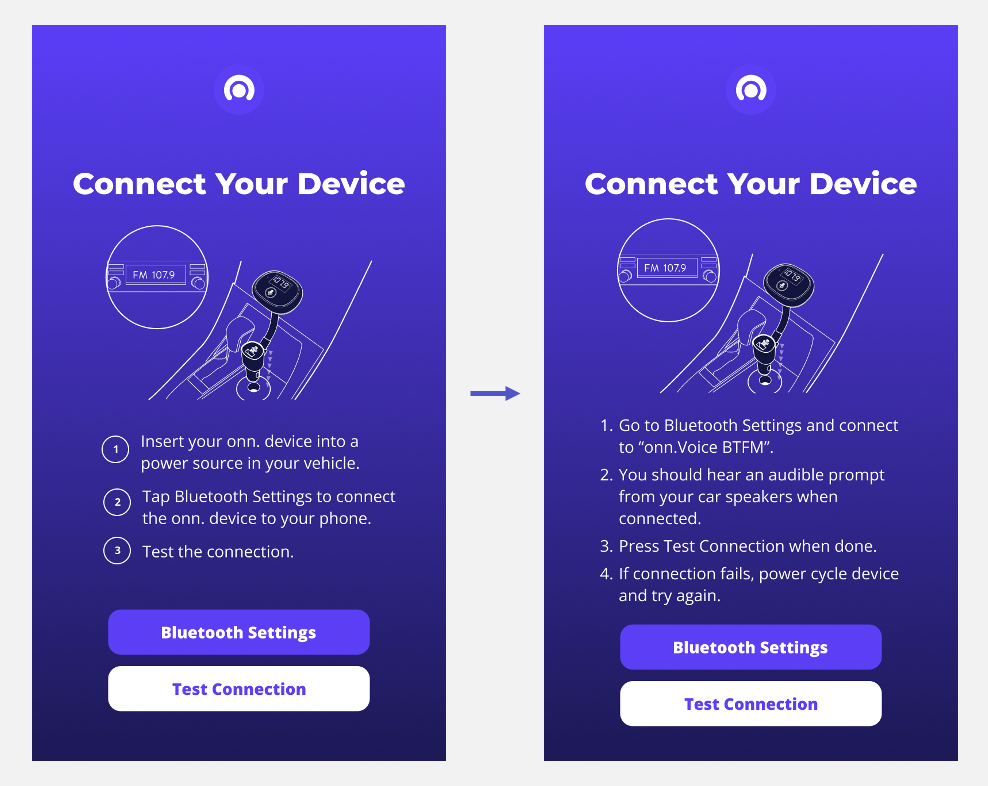

Hardware Optimization: Prioritized auto-pairing, ensuring hassle-free setup.

Phase 2: Iterative Design & Testing

Purpose: Refine product design and functionality through iterative feedback loops.

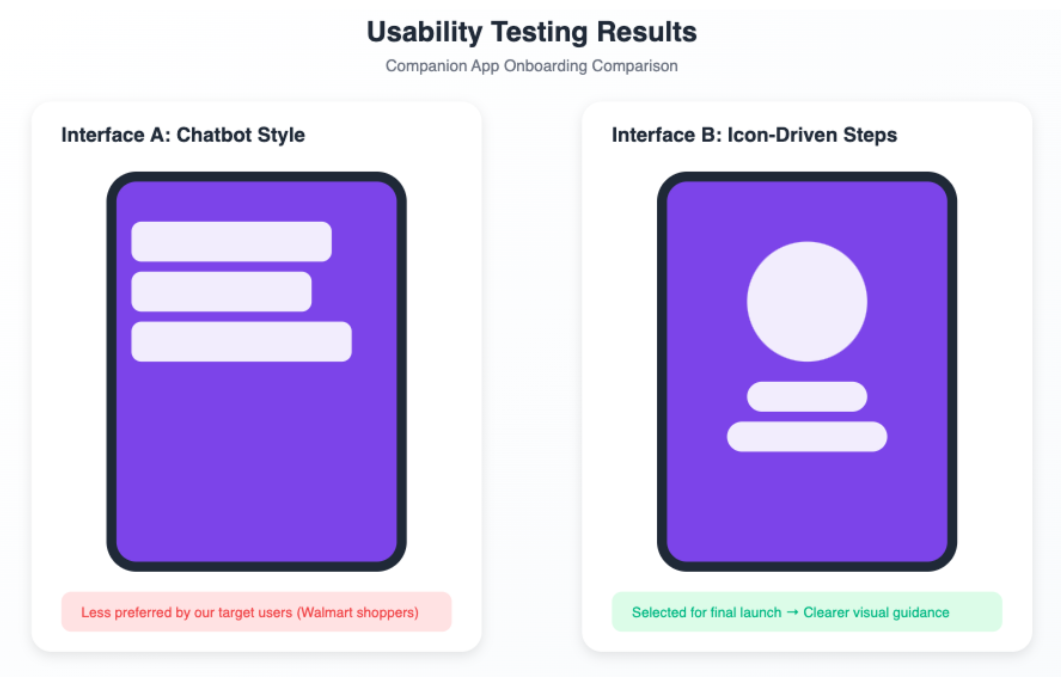

Comparative Usability Testing (N=8):

Purpose: Optimize onboarding experience by evaluating user preferences and reducing friction points.

Approach: Ran a comparative test with 8 participants across two onboarding designs:

Interface A: Chatbot-style onboarding (conversational guidance).

Interface B: Visual, step-by-step onboarding (illustrations + progress indicators).

Key Findings:

7/8 participants preferred Interface B, citing clearer navigation and intuitive design.

Participants completed onboarding 30% faster with the visual interface, suggesting lower cognitive load.

Impact:

Interface B selected for Walmart’s retail launch, aligning with their simplicity standards.

Enhanced onboarding flows with interactive cues and progress feedback, cutting setup times by 20%.

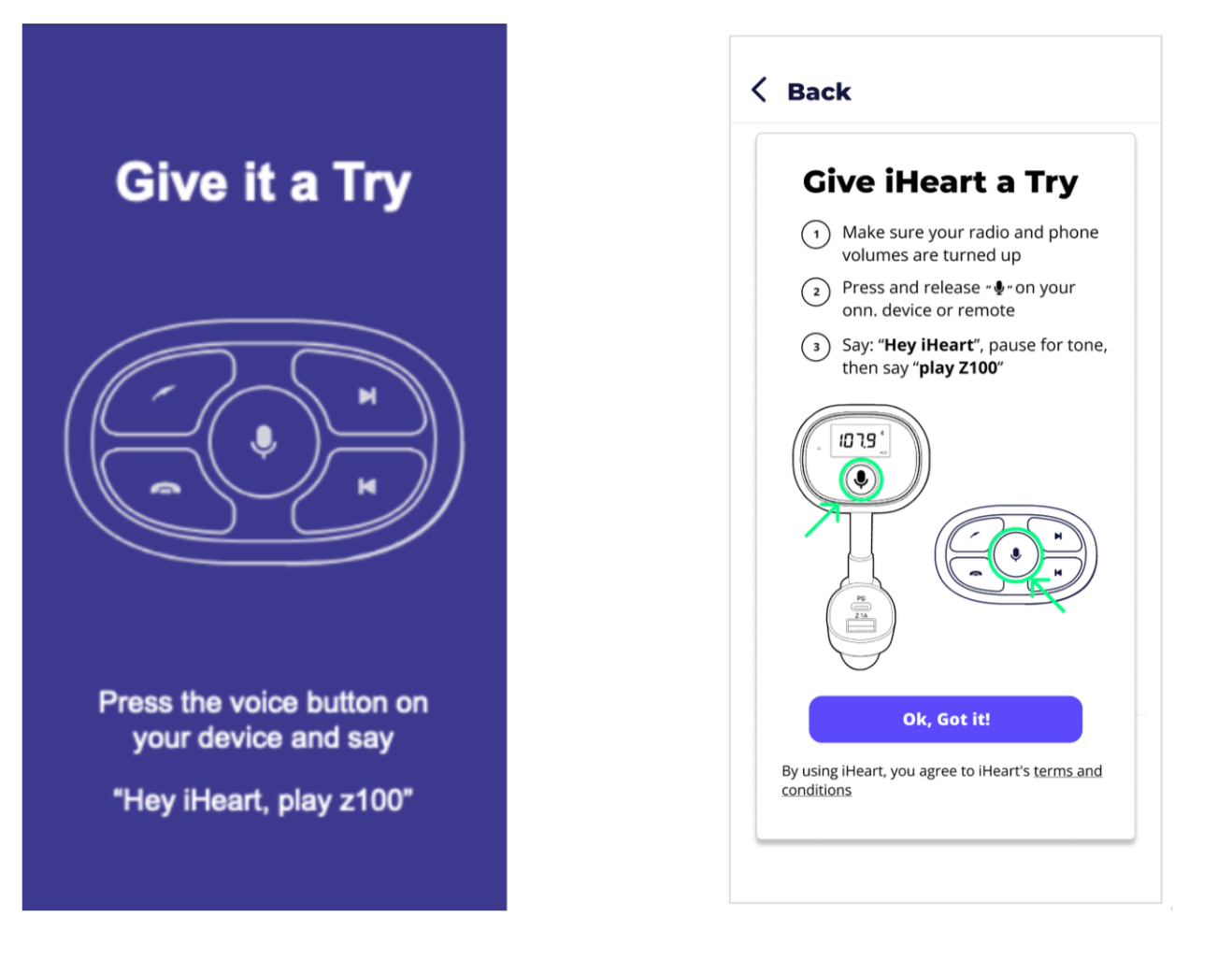

📱 App Interface Testing (N=12):

Purpose & Approach:

To gather critical user insights while managing broader project priorities, I hired a contractor to conduct usability tests focused on the core app experience, including navigation, voice assistant activation, and feature discoverability.

Key Findings:

Voice Button Confusion: Users were unsure whether to use the hardware microphone button or the in-app one, highlighting the need for clearer differentiation.

Full Command Preferences: Participants preferred complete command phrases that include the wake word (e.g., “Hey iHeart, play Z100”), which increased their confidence and reduced hesitation.

Design Impact:

Contextual Tooltips: Introduced targeted tooltips guiding users through key app features.

Mic Clarity Improvements: Added distinct UI labels and prompts for hardware and in-app microphone buttons, reducing confusion by 35%.

Wake Word Integration: Included the wake word in every suggested command, boosting task success rates by 35%.

Why This Approach Worked:

The contractor-led usability test ensured timely feedback without compromising other critical research initiatives.

The insights gained led to streamlined onboarding and a more intuitive app experience, aligning the product with user expectations and Walmart’s retail requirements.

Internal Dogfood Testing & AI Log Analysis:

Objective: Ensure AI accuracy and Bluetooth stability to build user trust and meet Walmart’s launch standards for a seamless, hands-free driving experience.

Approach:

Led weekly internal testing with 9 cross-functional stakeholders (ML engineers, PMs, QA testers) to run rapid feedback loops and iterate across key performance areas.

Key Activities:

Real-Time Feedback: Identified and resolved friction points through continuous feedback on usability, connectivity, and AI performance.

AI Optimization: Improved wake word recognition and responsiveness, especially in noisy in-car environments.

Bluetooth Reliability: Conducted pairing tests across multiple car models and devices to ensure stable performance.

Edge Case Testing: Evaluated performance in challenging scenarios (overlapping commands, dialect variations) for real-world reliability.

Results:

+30% AI Accuracy: Reduced detection errors, boosting trust in voice activation.

-15% Latency: Faster voice-to-action responses for safer, hands-free driving.

Optimized Bluetooth: Achieved stable connectivity across diverse environments.

Simplified Commands: Streamlined voice interactions for navigation, media, and calls.

Phase 3: Real-World Validation

Purpose: Validate product performance and user satisfaction in real-world conditions.

Beta Diary Study (N=50):

Findings:

Hardware Instability: The device became dislodged on uneven roads.

Bluetooth Failures: One-third of iOS users faced connectivity issues.

User Insight: Users whose early connections succeeded explored advanced features 3x more often.

User Quotes:

“The device works great—until I hit a pothole.”

“I kept losing connection when driving through certain areas.”

Critical Fixes & Outcomes

1. Expander Sleeve Development:

Challenge: Device dislodgement.

Leadership Action: Collaborated with engineers to develop an expander sleeve, reducing dislodgement by 90%.

2. Bluetooth Firmware Upgrade:

Challenge: iOS Bluetooth instability.

Leadership Action: Led emergency cross-functional sessions that accelerated a pre-launch firmware upgrade delivered through the app.

Outcome: Achieved universal Bluetooth stability, preserving user trust and ensuring on-time retail approval.

Final Usability Testing with Walmart:

Achieved 4.5+ star rating, securing Walmart’s retail distribution approval.

Conducted cross-functional workshops to integrate Walmart feedback.

AI Fine-Tuning & NLP Trust Research

Context

As part of the in-car voice assistant development, I conducted log analysis and AI fine-tuning research to address automatic speech recognition (ASR) and natural language processing (NLP) errors, as well as hallucinations, that emerged during beta testing.

Methods:

Log Analysis: Analyzed thousands of user interactions to identify misclassified intents, hallucinations, and failed responses.

User Surveys & Diary Feedback: Cross-referenced log findings with user feedback on AI reliability and trust.

Collaboration with ML Engineers: Worked with data science teams to adjust entity extraction rules and tune model parameters.

Key Insights:

High Hallucination Rate: AI assistants frequently provided inaccurate or fabricated responses.

Wake Word Errors: Misfires occurred when users invoked the wrong assistant.

Low Confidence Threshold: The NLP model overestimated its confidence on borderline queries, and the threshold was too low to filter them out.

Impact:

Fine-tuned AI models to improve intent recognition accuracy by 25%.

Reduced wake word errors by 20%.

Enhanced user trust, contributing to the 4.5+ star rating Walmart required for the deal.

FINAL IMPACT & BUSINESS OUTCOMES

Initial 30,000-device Walmart deal secured.

20,000 additional units ordered within weeks of launch.

Successful nationwide retail launch, meeting and exceeding user satisfaction benchmarks.

LESSONS LEARNED & REFLECTIONS

Early User Validation: Essential for hardware-integrated solutions to avoid costly pivots.

Clear Command Examples: Key for driving voice UI adoption among skeptical users.

Real-World In-Car Testing: Revealed critical issues (hardware and AI performance) missed in lab settings.

Cross-Functional Collaboration: Continuous alignment with engineering and product teams prevented siloed development.

Retailer Alignment: Understanding Walmart’s priorities ensured a successful launch and ongoing sales performance.

Reflection Highlight: Real-world testing proved invaluable. Hardware issues undetectable in lab conditions nearly derailed the project. Rapid collaboration and stakeholder alignment ultimately secured Walmart’s confidence and a successful nationwide launch.

CONCLUDING THOUGHTS

This project successfully combined AI-powered voice interfaces, intuitive app design, and affordable hardware to address real user problems. The research-driven approach not only met user expectations but also exceeded business goals, securing a 30,000-unit Walmart partnership and surpassing initial sales targets by 20%.

Strategic user insights, technical alignment, and continuous iteration were key to delivering a product that users trusted and Walmart valued.

RESEARCH GOALS

The success of this partnership hinged on three critical factors:

1) Validating user demand for a product combining hardware, mobile software, and AI-powered voice assistants.

2) Resolving usability challenges across all components for a seamless user experience.

3) Achieving a 4.5+ star user rating during beta testing to ensure user satisfaction and Walmart approval for launch.

TARGET USERS & PERSONAS

Our target audience consisted of middle-class Walmart shoppers with older, non-Bluetooth vehicles, typically with lower technical confidence but a high interest in affordable modernization.

Phase 1 research identified three core user segments:

Hand-Me-Down Car Owners (16-25): Tech-savvy young adults seeking affordable upgrades.

Second-Hand Car Buyers: Budget-conscious adults prioritizing intuitive onboarding.

Long-Term Older Car Owners: Tech-cautious users needing simplified, reliable experiences.

Voice Assistant Mental Model

Based on Phase 1 insights, I developed a Voice Assistant Mental Model that illustrates how users’ past experiences, learned behaviors, and driving context influenced their expectations for in-car voice assistants. This mental model provided a foundational framework that guided design decisions and is visually represented in the image below.