Sauce & Slice

Pizzeria

Brand identity development and website redesign for a longstanding, family-owned Brooklyn pizzeria.

Year

2024

Client

Sauce & Slice

Overview

Role: Sole UX Researcher

Time Frame: 6 months

Company: Native Voice AI

Problem Statement

Accessing music or navigation apps while driving is cumbersome, frustrating, and unsafe.

What if simple voice commands could make this experience seamless and hands-free?

Context

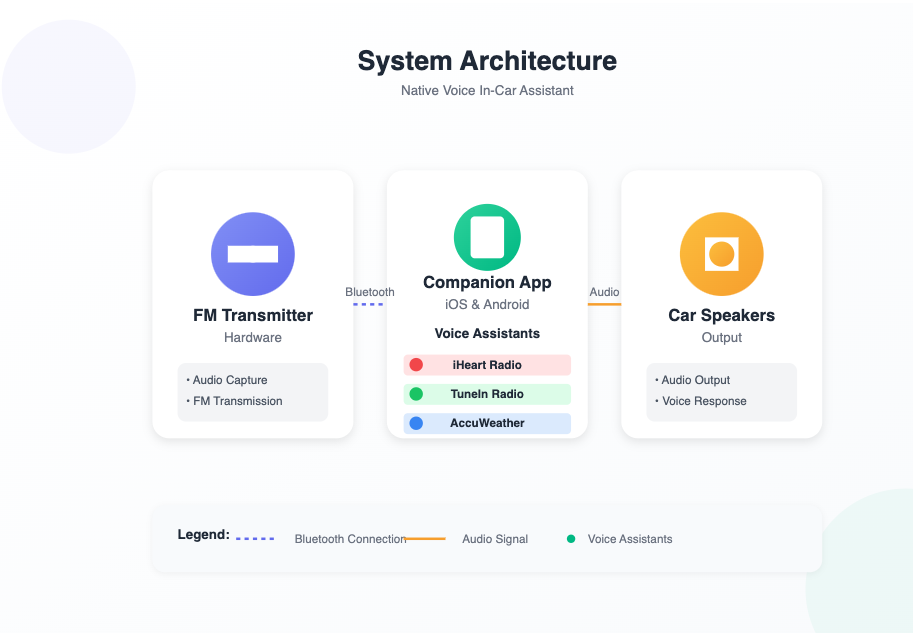

Native Voice explored a partnership with a Bluetooth car device manufacturer to integrate voice assistant capabilities (e.g., "Hey iHeart," "Hey TuneIn," "Hey Google") directly into vehicles. As part of this initiative, Native Voice would develop a mobile app to house the voice assistants, enabling users to manage and customize their voice experiences. The device and app together would allow users to control media, navigation, and other tasks via voice commands through car speakers.

The research had two critical goals:

Validate user demand for this product before investing development resources.

Identify and resolve usability challenges across hardware, mobile app, and voice assistant interactions to ensure a successful retail launch.

Target Users & Personas

Our primary audience was middle-class Walmart shoppers, typically less tech-savvy owners of older, non-Bluetooth vehicles, including:

Hand-Me-Down Car Owners (Ages 16-25): Young drivers using older family cars.

Second-Hand Car Buyers: Individuals purchasing used cars without modern features.

Long-Term Older Car Owners: Committed owners of non-Bluetooth vehicles.

Secondary Market: Tech enthusiasts seeking enhanced voice assistant functionality even in Bluetooth-equipped vehicles.

Understanding this audience shaped every aspect of the research, from usability testing to onboarding design.

Business Stakes:

Retail Distribution Partner: Walmart

Beta Goal: Achieve a 4.5+ star user rating before Walmart would approve the launch.

Result: Successfully met the rating requirement → Secured an initial 30,000-device deal.

Post-Launch Success: Due to strong performance, Walmart signed an additional 20,000-device deal within weeks of launch.

Research Methods

Over 6 months, I conducted foundational, generative, and evaluative research across multiple methodologies:

Survey & Interviews: Gather insights into user expectations, use cases, and interactions with the device and mobile app.

Comparative Usability Testing: Optimize the UI design for the companion mobile app by benchmarking against similar products.

Dogfooding & Internal Testing: Collect rolling feedback from internal users to train the AI model and to catch voice assistant accuracy issues and hardware and app usability issues early.

Diary Usability Study & Log Analysis: Evaluate real-world driving experiences with both the device and mobile app through long-term user feedback and behavioral data.

Final Lab Test (in partnership with Walmart): Conduct a comprehensive usability evaluation to secure retailer approval before launch.

Key Findings & Impact

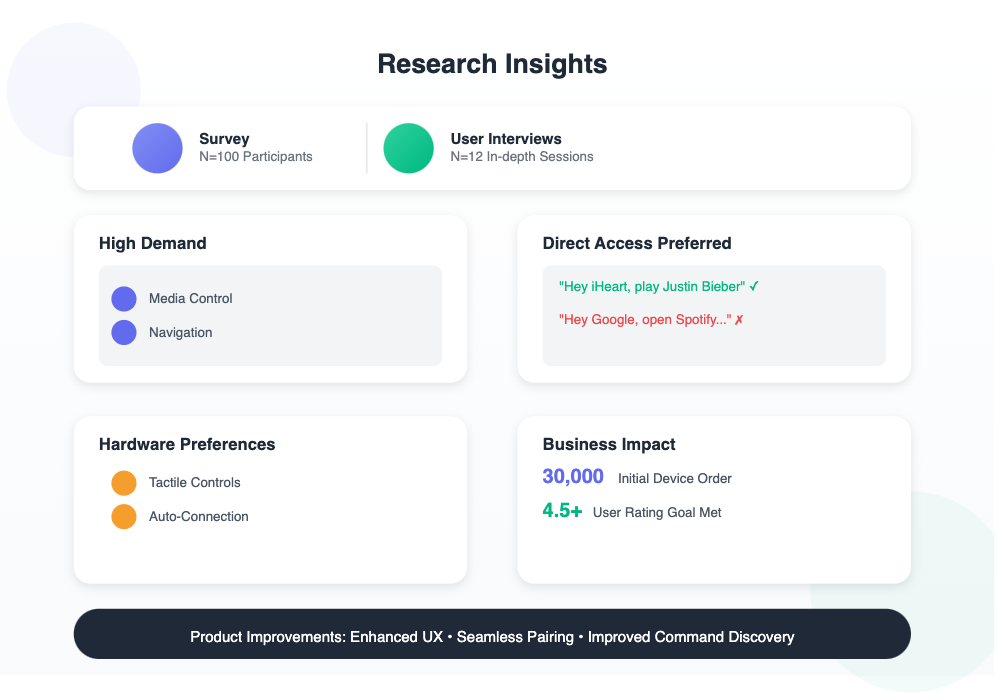

1. Survey & Interviews – Validating Demand & Identifying Use Cases

I conducted a survey (N=100) and 1:1 user interviews (N=12) to explore driving habits, expectations for voice assistants, and pain points.

Key Insights:

High Demand for In-Car Voice Assistants: Users were excited about the product, particularly for hands-free media control, navigation, weather updates, and calling.

Preference for Direct Assistant Access: Users wanted one-step voice commands (e.g., “Hey iHeart, play Justin Bieber”) instead of going through their phone’s assistant (e.g., “Hey Google, open Spotify”). Users felt existing multipurpose VAs (Siri, Alexa) were overwhelming because it was unclear what they could and could not do.

Hardware Preferences: Users preferred a tactile button on the device over a touchscreen interface and expected automatic Bluetooth connection upon entering the car.

Learning Curve Challenge: Users wanted example commands to guide their interactions with each assistant.

Impact:

Research confirmed strong product-market fit, supporting the Walmart partnership and securing the initial 30,000-device deal.

Insights directly influenced companion app UX – We introduced a “Top Voice Commands” screen for each assistant to reduce confusion.

Findings informed hardware design – Manufacturers adjusted connectivity settings for seamless auto-pairing.

2. Comparative Usability Testing – Optimizing the Companion App Onboarding UX

I led two rounds of usability testing (N=12) to evaluate two interface designs for the companion app:

Interface A → Chatbot-style onboarding

Interface B → Icon-driven step-by-step onboarding

Key Insights:

Preference for Visual Guidance: Our target users, less tech-savvy Walmart shoppers, found visual, step-by-step guidance clearer and easier to navigate than the chatbot approach.

Confusion with Voice Button Placement: Some users struggled to locate the mic button, unsure whether to press the physical device or the app’s interface.

Impact:

Led to final selection of Interface B for the Walmart launch.

UI adjustments improved voice interaction clarity (e.g., refining “Press the Mic” instructions).

3. Diary Study & Log Analysis – Real-World Driving Usability

To assess real-world performance, I conducted a 2-week diary study with 15 participants using the device in their cars.

Key Insights:

Easy Setup: Users found onboarding smooth and intuitive.

Hardware Issues: Some devices loosened from the socket during use.

Bluetooth Frustrations: Occasional connectivity issues disrupted use.

Voice Assistant Limitations: Some commands failed due to unclear phrasing or unrecognized queries.

Impact:

Product Fixes Before Launch:

Hardware Update → Manufacturers created an expander sleeve to prevent device looseness.

App Enhancement → Added troubleshooting tips for Bluetooth connectivity.

User Manual Update → Expanded voice command examples for better discoverability.

AI Fine-Tuning & NLP Trust Research

Context

As part of the in-car voice assistant development, I conducted log analysis and AI fine-tuning research to address ASR/NLP errors and hallucinations that emerged during beta testing.

Methods:

Log Analysis: Analyzed thousands of user interactions to identify misclassified intents, hallucinations, and failed responses.

User Surveys & Diary Feedback: Cross-referenced log findings with user feedback on AI reliability and trust.

Collaboration with ML Engineers: Worked with data science teams to adjust entity extraction rules and tune model parameters.
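As a rough illustration of the log analysis step, the sketch below tallies logged interactions into failure categories. It is a minimal example under stated assumptions, not the pipeline used on the project: the record fields (`predicted_intent`, `labeled_intent`, `responded`, `hallucinated`) and the category names are hypothetical, and the hallucination flag is assumed to come from manual annotation.

```python
from collections import Counter


def classify_interaction(record):
    """Assign one failure category to a logged interaction.

    The record schema is hypothetical; real logs would differ.
    """
    if not record["responded"]:
        return "failed_response"
    if record["predicted_intent"] != record["labeled_intent"]:
        return "misclassified_intent"
    # Assumed to be a flag set by a human reviewer.
    if record.get("hallucinated", False):
        return "hallucination"
    return "ok"


def summarize_failures(records):
    """Count how many interactions fall into each category."""
    return Counter(classify_interaction(r) for r in records)


# Invented sample data, for illustration only.
sample_logs = [
    {"assistant": "iHeart", "predicted_intent": "play_music",
     "labeled_intent": "play_music", "responded": True},
    {"assistant": "TuneIn", "predicted_intent": "play_station",
     "labeled_intent": "navigate", "responded": True},
    {"assistant": "Google", "predicted_intent": "weather",
     "labeled_intent": "weather", "responded": False},
    {"assistant": "Google", "predicted_intent": "weather",
     "labeled_intent": "weather", "responded": True, "hallucinated": True},
]

summary = summarize_failures(sample_logs)
```

A per-category count like this is one way to decide which error type to raise with engineers first, and to cross-reference against diary feedback.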

Key Insights:

High Hallucination Rate: AI assistants frequently provided inaccurate or fabricated responses.

Wake Word Errors: Misfires occurred when users invoked incorrect assistants.

Low Confidence Threshold: The NLP model overestimated confidence in borderline queries.
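To make the confidence-threshold insight concrete, here is a minimal sketch of threshold-based routing: the assistant acts on a recognized intent only when the model's confidence clears a cutoff, and otherwise asks the user to clarify. The function name, the return values, and the 0.75 cutoff are assumptions for illustration; the actual model parameters were not documented here.

```python
def route_query(intent, confidence, threshold=0.75):
    """Decide whether to act on a recognized intent.

    `threshold` is a hypothetical cutoff. Raising it sends more
    borderline queries to a clarification prompt instead of acting
    on a possibly wrong guess.
    """
    if confidence >= threshold:
        return ("execute", intent)
    return ("clarify", intent)


# A borderline query is routed to clarification at the higher cutoff,
# but would have been executed at a lower one.
strict = route_query("play_music", 0.62)
lenient = route_query("play_music", 0.62, threshold=0.5)
```

The trade-off is between fewer wrong actions and more clarification prompts, which matters for drivers who need short, hands-free interactions.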

Impact:

Fine-tuned AI models to improve intent recognition accuracy by 25%.

Reduced wake word errors by 20%.

Enhanced user trust, contributing to the 4.5+ star rating required for the Walmart deal.

Final Impact & Business Outcomes

Beta Goal Achieved: Met 4.5+ star rating requirement for Walmart.

Secured Initial 30,000-Device Deal.

Post-Launch Expansion: Due to product success, Walmart ordered 20,000 more devices within weeks.

Influenced Product Roadmap: Research insights led to hardware, app, and documentation improvements.

Challenges & Learnings

Remote Testing for Complex Tech:

Users were unfamiliar with advanced voice assistant concepts.

Solution: Improved test instructions and real-time support for diary study participants.

Technical Integration Across Hardware, Software & AI:

Bluetooth pairing & voice recognition created complex troubleshooting challenges.

Solution: Early collaboration with engineers improved issue resolution.

Tight Deadlines with Retail Constraints:

Fixed launch deadlines with hardware limitations required fast execution.

Solution: Clear stakeholder alignment and proactive communication ensured smooth coordination.

Conclusion

This project was a rewarding challenge, blending AI-driven voice interfaces, companion app UX, and real-world hardware usability. I enjoyed solving complex, cross-functional problems and learned the power of aligning research with business goals.

I’m especially proud that our research-driven approach secured a 30,000-device deal with Walmart, unlocked an additional 20,000-device order, and created a voice assistant experience tailored to our users' needs.

I look forward to continuing to craft seamless, AI-driven user experiences that integrate into daily life.
